Research

Past projects

Networking

There are a number of projects related to high-performance networks and their applications. Some networking topics are also covered in the Virtualization, Multimedia distribution and processing, and Self-organizing systems sections.

- Efficient distribution of data on the optical layer – photonic multicast.

- Scalable user-empowered synchronous data distribution.

- CoUniverse – self-organizing component-based collaborative environment.

- Low-latency high-definition and post-high-definition video transmissions: UltraGrid and UltraGrid2K.

- VirtCloud – network virtualization for clouds and grids.

- DiProNN – programmable network node with VM support.

- VirtLan – network virtualization for educational purposes.

Photonic multicast

In collaboration with the Optical Networks activity of the CESNET NREN and CzechLight, we have proposed the principle of a reconfigurable optical multicast device: the CzechLight Multicast Switch. This collaboration resulted in a utility model of the device as well as a pending patent.

Active network elements

For a number of years, we have worked on active programmable networks and user-empowered data distribution. Projects and results include:

- a programmable reflector for user-empowered data distribution (meaning that data is distributed without privileged access to network resources, which is required, for instance, to set up multicast); a production implementation of this concept is RUM2

- active element networks and a distributed active element for efficient and scalable data distribution, with respect to both the number of streams and the bandwidth of a stream
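To illustrate the reflector idea, here is a minimal Python sketch, assuming plain UDP and a single reflector: every packet a session member sends to the reflector is copied to all other members, so distribution needs only ordinary unicast and no privileged network configuration. The `Reflector` class, its methods, and the rule that a sender joins implicitly with its first packet are hypothetical illustrations, not the RUM2 implementation.

```python
import socket
from collections import defaultdict


class Reflector:
    """Hypothetical user-empowered reflector (not RUM2's code).

    Every packet received from one session member is copied to all other
    members, so distribution needs only ordinary unicast and no privileged
    network setup such as multicast routing.
    """

    def __init__(self):
        # session id -> set of member addresses (host, port)
        self.sessions = defaultdict(set)

    def join(self, session, addr):
        self.sessions[session].add(addr)

    def leave(self, session, addr):
        self.sessions[session].discard(addr)

    def route(self, session, sender, packet):
        """Return the (destination, packet) pairs to send, skipping the sender."""
        return [(dst, packet) for dst in sorted(self.sessions[session]) if dst != sender]


def serve(reflector, session, port):
    """Blocking UDP loop; a sender implicitly joins the session with its first packet."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        packet, sender = sock.recvfrom(65535)
        reflector.join(session, sender)
        for dst, data in reflector.route(session, sender, packet):
            sock.sendto(data, dst)
```

Because a single reflector sends every packet to N - 1 receivers, its outgoing bandwidth grows linearly with the session size, which is the kind of limit that motivates distributing the active element across several nodes.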

DiProNN – programmable network node with VM support

Active/programmable networks allow end users to inject customized programs into special network nodes, so that their data can be processed (in the way they want) directly in the network as it passes through. This approach has been presented as a reaction to a certain fossilization of traditional computer networks, which on the one hand behave as a simple and extremely fast forwarding infrastructure, but on the other hand were not designed for fast, dynamic reconfiguration and the deployment of novel services. Multimedia processing (e.g., videoconferencing, video transcoding, video on demand), security services (data encryption over untrusted links, secure and reliable multicast, etc.), intrusion detection systems, and dynamically adapting intranet firewalls are just a few of the services that could be provided. The fundamental issues that all such architectures have to address are:

- Execution Environment Flexibility – the active/programmable nodes have to provide an execution environment (EE) inside which all user active programs (APs) are processed. Ideally, the nodes should be able to accept and run user-supplied APs designed for an arbitrary EE, which provides the highest possible flexibility.

- Resource Isolation and Security – for security purposes, the running APs have to be strongly isolated from each other, so that a malicious or compromised AP can neither affect other APs sharing the same HW/SW resource(s) nor directly affect the simultaneously running APs themselves.

We claim that these issues can essentially be addressed by using virtualization techniques. Virtualization, properly combined with other useful concepts, allows us to propose a very flexible and powerful programmable node that lets its users develop active programs for arbitrary execution environments and dynamically compose them into complex processing applications. Besides execution environment flexibility, the employed virtualization also enables the node to provide higher security and strong isolation, further enhanced by robust resource reservations and guarantees.

Contributions:

The set of features provided by the proposed DiProNN node (DiProNN = Distributed Programmable Network Node) can be summarized as follows:

- Built-in execution environment flexibility – the node can process active programs written for any of the multiple execution environments it supports.

- Execution environment uploading – if the execution environments provided by the node are unsuitable for any reason, users can upload their active programs encapsulated in their own execution environments, in which the APs are then processed.

- Component-based programming – to simplify node programming, the node accepts user sessions consisting of multiple single-purpose cooperating active programs (components) and the data flows among them, defined on the basis of workflow principles. Session workflows can be dynamically adapted to changing conditions and, as follows from the previous items, each component (AP) may be designed for a different execution environment (provided by the node or uploaded by the user).

- Parallel/distributed processing – to make the node capable of processing larger amounts of data, its architecture is based on commodity PC clusters, allowing both parallel and distributed processing of user sessions. APs intended to run in parallel do not have to be adapted in any way to make such processing possible.

- Complex resource management and QoS support – to give different priorities to different applications, users, or data flows, or to guarantee a certain level of processing performance to a session, the node provides complex resource management capabilities that allow users to specify the resources required by the particular APs processing their sessions.

- Strong isolation of APs and resources – for security purposes, running APs are strongly isolated from each other, so that a malicious or compromised AP can neither affect other APs sharing the same HW/SW resource(s) nor affect the simultaneously running APs themselves. Such strong isolation also eliminates hidden influence among the APs and ensures that the APs cannot compromise each other in any way other than through the network. Once the APs are strongly isolated, their resource utilization can also be accounted for.

- Mechanisms for fast AP communication – since processing components might need to communicate with each other (e.g., for internal synchronization and/or state sharing), and such communication should be as fast as possible, the node allows control communication channels to be defined among the APs; these channels are provided by a specialized low-latency interconnect. The APs do not have to be aware of these interconnects during development, so they need not be adapted to the particular interconnect used by a given node implementation.

- Virtualization system-independent architecture – the node architecture is designed neither for a particular virtualization system nor for a specific class of them. It uses only common networking techniques, which allows the node to be built on almost any existing virtualization solution.
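The component-based session model and per-AP resource requests can be sketched as a small workflow graph. In this hypothetical Python sketch (Python 3.9+ for `graphlib`), a session is a list of components, each naming the execution environment it needs, a requested CPU share, and the components that feed it data; the node derives a processing order with a topological sort and admits the session only if the requested CPU fits. The field names, the CPU-share unit, and the simple admission test are assumptions for illustration, not DiProNN's actual session format or resource manager.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass
class Component:
    name: str
    execution_env: str   # EE the AP targets (node-provided or user-uploaded)
    cpu_share: float     # requested CPU, in cores (hypothetical unit)
    inputs: list = field(default_factory=list)  # upstream components feeding it


def processing_order(session):
    """Topologically order components so producers come before consumers."""
    graph = {c.name: set(c.inputs) for c in session}
    return list(TopologicalSorter(graph).static_order())


def admit(session, free_cpu):
    """Accept the session only if all requested CPU can be reserved."""
    return sum(c.cpu_share for c in session) <= free_cpu


# Hypothetical session: receive a stream, transcode it, encrypt it, send it on.
session = [
    Component("receiver", "node-linux-vm", 0.25),
    Component("transcoder", "user-uploaded-vm", 1.0, ["receiver"]),
    Component("encryptor", "node-linux-vm", 0.5, ["transcoder"]),
    Component("sender", "node-linux-vm", 0.25, ["encryptor"]),
]
```

A cyclic workflow makes `static_order()` raise `graphlib.CycleError`, which is a convenient place to reject a malformed session before any VM is started.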

Publications:

Scheduling

The laboratory focuses on various aspects of scheduling, particularly in the context of distributed systems.

- Job scheduling for Grid systems.

- Data streams scheduling for real-time media-based communication.

- Scheduling for asynchronous multimedia processing as a part of DEE.

Contacts:

- Assoc. Prof. Hana Rudová, hanka@fi.muni.cz

- Dalibor Klusáček, Ph.D., klusacek@ics.muni.cz

ALEA grid simulator

This work concentrates on the design of a system intended for the study of advanced scheduling techniques for planning various types of jobs in a Grid environment. The solution is able to deal with common problems of job scheduling in Grids, such as the heterogeneity of jobs and resources and dynamic runtime changes like the arrival of new jobs.

Alea Simulator is based on the latest GridSim 5 simulation toolkit, which we extended to provide a simulation environment that supports various Grid scheduling problems. To demonstrate the features of the Alea environment, we implemented an experimental centralised Grid scheduler that uses advanced scheduling techniques for schedule generation. So far, local-search-based algorithms and several policies have been tested, as well as "classical" queue-based algorithms such as FCFS and EASY backfilling.

The scheduler is capable of handling the dynamic situation in which jobs appear in the system during the simulation. In this case, the generated schedule changes over time as some jobs finish while new ones arrive.
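To make the queue-based baselines concrete, below is a minimal Python sketch of one EASY backfilling pass, assuming rigid jobs with user runtime estimates and a single homogeneous pool of processors. Jobs start in FCFS order until the queue head does not fit; the head then receives a reservation at the earliest time enough processors are freed (the shadow time), and later jobs may jump ahead only if they finish before that time or use processors the head will not need. The `Job` type and the `easy_backfill` function are hypothetical and are not taken from Alea or GridSim.

```python
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    procs: int        # processors requested (rigid job)
    walltime: float   # user-supplied runtime estimate


def easy_backfill(now, total_procs, running, queue):
    """One EASY-backfilling pass (hypothetical helper, not Alea/GridSim code).

    running: list of (estimated_end_time, procs) for executing jobs
    queue:   waiting jobs in arrival (FCFS) order
    Returns the jobs to start at time `now`.
    """
    running = list(running)
    queue = list(queue)
    free = total_procs - sum(procs for _, procs in running)
    started = []

    # 1) FCFS: start jobs from the head of the queue while they fit.
    while queue and queue[0].procs <= free:
        job = queue.pop(0)
        started.append(job)
        free -= job.procs
        running.append((now + job.walltime, job.procs))
    if not queue:
        return started

    # 2) Reservation for the blocked head: the shadow time is the earliest
    #    estimated end time at which enough processors become free for it.
    head = queue[0]
    avail, shadow = free, now
    for end, procs in sorted(running):
        avail += procs
        shadow = end
        if avail >= head.procs:
            break
    if avail < head.procs:
        return started  # head needs more than the whole machine; no reservation
    extra = avail - head.procs  # processors the head will not need at shadow time

    # 3) Backfill: a later job may start now if it fits and does not delay
    #    the head, i.e. it ends by the shadow time or uses only extra processors.
    for job in queue[1:]:
        if job.procs > free:
            continue
        if now + job.walltime <= shadow:
            started.append(job)
            free -= job.procs
        elif job.procs <= extra:
            started.append(job)
            free -= job.procs
            extra -= job.procs
    return started
```

For example, on 10 processors with one running job holding 6 of them until t = 5, and a queue A (8 procs), B (2 procs, 3 time units), C (2 procs, 20 units), D (3 procs), the head A is reserved for t = 5. B backfills because it ends before then, C because it fits into the 2 processors A will not need, and D waits. Pure FCFS would start nothing at this point.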

For further details, please see the ALEA webpage.

Multimedia Processing

Distributed Encoding Environment

Distributed Encoding Environment (DEE) is a distributed system that allows efficient large-scale asynchronous media processing. It uses distributed parallel file systems to achieve good performance. The DEE concept has been used to prove favorable theoretical scheduling properties. Moreover, its prototype implementation has been used at Masaryk University since 2004 to process lecture recordings on a large scale.

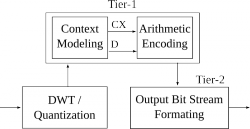

JPEG2000 encoding on GPU

The aim of the project is a GPU-accelerated implementation of JPEG2000 for real-time high-definition video compression or fast compression of large-scale images. JPEG2000 is an image compression standard created by the Joint Photographic Experts Group (JPEG). It aims to provide not only compression performance superior to the earlier JPEG standard but also advanced capabilities demanded by applications in fields such as medical imaging, the film industry, and image archiving. It features optional mathematically lossless processing, error resilience, and progressive image transmission by improving pixel accuracy and resolution. On the other hand, these advanced features and the superb compression performance come with higher computational demands, which imply slower processing.

Because GPUs offer considerable raw computing power, a number of approaches to general-purpose GPU computing have been used in recent years, including GLSL, CUDA, and OpenCL. Because of its flexibility and its potential to exploit the power of GPUs, we have opted for CUDA, a massively parallel computing architecture designed by NVIDIA. Modern GPU architectures are designed to run thousands of threads in parallel.

Fig. 1: Block diagram of JPEG2000 encoder

The input to EBCOT (Embedded Block Coding with Optimized Truncation) is transformed using the Discrete Wavelet Transform (DWT) and optionally quantized. Image data is then partitioned into so-called code-blocks. Each code-block is independently processed by the context-modeling and arithmetic MQ-coder modules in Tier-1. The context modeler analyzes the bit structure of a code-block and collects contextual information CX, which is passed together with bit values D to the arithmetic coding module for binary compression.
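For lossless coding, JPEG2000 uses the reversible integer 5/3 wavelet, usually computed with two lifting steps (predict, then update). The Python sketch below shows one level of the 1-D transform on an even-length integer signal with symmetric boundary extension; the 2-D DWT applies it to rows and then columns and repeats it on the low-pass band for further levels. It is a plain CPU reference for illustration only, not the project's CUDA implementation, and the function names are hypothetical.

```python
def dwt53_forward(x):
    """One level of the reversible 5/3 lifting DWT on an even-length int list.

    Returns (s, d): low-pass and high-pass coefficients.
    """
    n = len(x)
    if n < 2 or n % 2:
        raise ValueError("expects an even-length signal of at least 2 samples")

    def ext(i):
        # Whole-sample symmetric extension past the right edge: x[n] -> x[n-2].
        return x[2 * (n - 1) - i] if i >= n else x[i]

    half = n // 2
    # Predict step: odd samples minus the floor-average of their even neighbors.
    d = [x[2 * k + 1] - (x[2 * k] + ext(2 * k + 2)) // 2 for k in range(half)]
    # Update step: even samples plus a rounded quarter of the neighboring details
    # (at the left edge, d[-1] mirrors to d[0]).
    s = [x[2 * k] + (d[max(k - 1, 0)] + d[k] + 2) // 4 for k in range(half)]
    return s, d


def dwt53_inverse(s, d):
    """Undo the lifting steps in reverse order; exact for integer input."""
    half = len(s)
    n = 2 * half
    x = [0] * n
    for k in range(half):
        x[2 * k] = s[k] - (d[max(k - 1, 0)] + d[k] + 2) // 4
    for k in range(half):
        right = x[2 * k + 2] if 2 * k + 2 < n else x[n - 2]
        x[2 * k + 1] = d[k] + (x[2 * k] + right) // 2
    return x
```

Because each lifting step only adds a rounded function of other samples, the inverse subtracts exactly the same values and recovers the input bit for bit. On a linear ramp such as [10, 12, ..., 24], the high-pass coefficients are zero except at the right boundary. Each coefficient depends only on a few neighbors, which is what makes a one-thread-per-coefficient GPU mapping attractive.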

The GPU implementation of JPEG2000 is now being developed by Comprimato.

Virtualization

Contact person: Tomáš Rebok, xrebok@fi.muni.cz

VirtCloud

VirtCloud is a system for interconnecting virtual clusters in a state-wide network based on advanced features available in academic networks. The system supports dynamic creation of virtual clusters without the need for run-time administrative privileges on the backbone core network, encapsulation of the clusters, controlled access to external resources for cluster hosts, full user access to the clusters, and optional publishing of the clusters. A prototype implementation has been deployed in MetaCenter (the Czech national Grid infrastructure) using the Czech national research network CESNET2.

For more information, see http://www.cesnet.cz/doc/techzpravy/2009/virtcloud-design/.

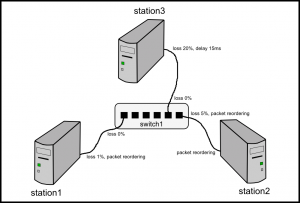

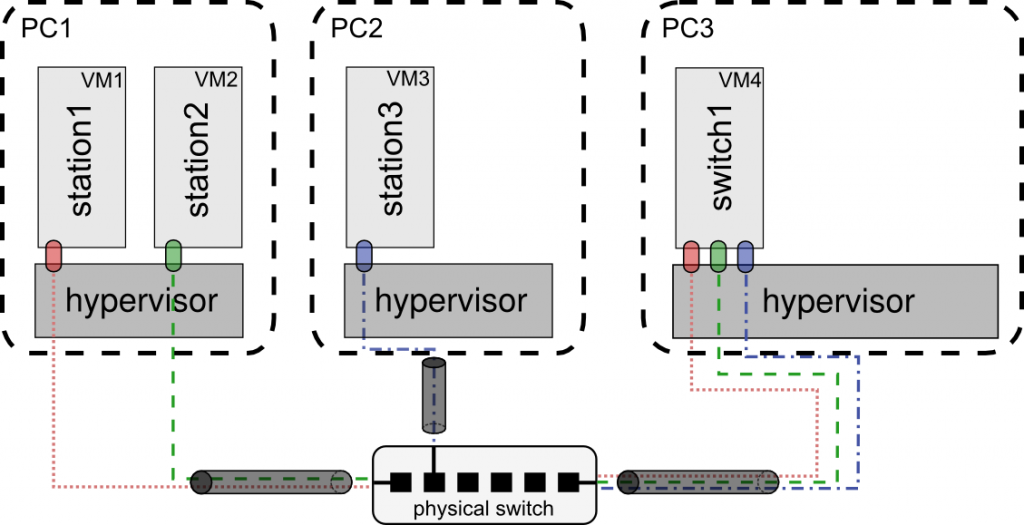

VirtLan – network virtualization for educational purposes

There are two common approaches when students need practical training in computer networks and/or network applications and protocols need to be tested: appropriately equipped network laboratories, or network simulators. While network laboratories provide the most realistic experience of real networking, they lack scalability (in terms of the number of students who can use them simultaneously), offer limited flexibility (which can be overcome only by financial/time investments), and make it difficult to emulate specific network states (packet losses, packet latencies, packet reordering, etc.). Network simulators, on the other hand, provide very high scalability and flexibility and easily allow the emulation of specific network states. However, they give a poor sense of real networking, including limited possibilities for realistic testing of network protocols/applications.

The goal of this project is to combine the advantages of both approaches by proposing and implementing a novel network emulator framework that allows a virtual network (topology, network components, etc.) to be defined on a virtualized infrastructure. All network components are provided by virtual machines (including virtual network switches with a realistic switch control system), and the virtual network links are provided by point-to-point 802.1Q-over-802.1Q virtual links. The framework thus provides the following set of features:

- the definition of various network topologies

- in the users' VMs (virtual computers/servers), the possibility to use all user-defined upper layers, including the L3 ISO/OSI layer (the IP layer), since the emulator operates only up to the L2 ISO/OSI layer

- the possibility to connect to all defined network components (virtual computers/servers as well as switches) using a console and/or common connection methods (SSH, RDesktop, VNC, telnet, etc.)

- the definition of various virtual network link parameters (including emulated latency, jitter, packet reordering, link failures, etc.), as well as the possibility to use predefined link parameters (fiber links, metal links, wireless links, etc.)

- the possibility to define triggers and timed events to increase the debuggability and reproducibility of tests

- and many others.
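The link-parameter feature can be illustrated with Linux `tc` and its `netem` queueing discipline, one common way to add delay, jitter, loss, and reordering to a virtual interface. The text does not say which mechanism VirtLan uses, so this Python sketch is an assumption: it only builds the `tc` command strings for one end of each virtual link, and the values in `PROFILES` are made-up placeholders, not measured characteristics of fiber or wireless links.

```python
from dataclasses import dataclass


@dataclass
class LinkParams:
    delay_ms: float = 0.0
    jitter_ms: float = 0.0
    loss_pct: float = 0.0
    reorder_pct: float = 0.0


# Hypothetical predefined link profiles; the numbers are illustrative only.
PROFILES = {
    "fiber": LinkParams(delay_ms=1),
    "wireless": LinkParams(delay_ms=5, jitter_ms=2, loss_pct=1),
}


def netem_command(dev, p):
    """Build a `tc qdisc add ... netem` command emulating `p` on interface `dev`."""
    args = ["tc", "qdisc", "add", "dev", dev, "root", "netem"]
    if p.delay_ms or p.jitter_ms:
        args += ["delay", f"{p.delay_ms:g}ms"]
        if p.jitter_ms:
            args.append(f"{p.jitter_ms:g}ms")
    if p.loss_pct:
        args += ["loss", f"{p.loss_pct:g}%"]
    if p.reorder_pct:
        # netem only reorders packets when a delay is configured.
        if not p.delay_ms:
            raise ValueError("reordering requires a non-zero delay")
        args += ["reorder", f"{p.reorder_pct:g}%"]
    return " ".join(args)


# Hypothetical topology: (VM, interface, profile) for one end of each link.
topology = [("pc1", "eth0", "fiber"), ("pc2", "eth0", "wireless")]
for vm, dev, profile in topology:
    print(vm, netem_command(dev, PROFILES[profile]))
```

Keeping profiles as data makes it easy to apply the same predefined link type to many links, and a timed event could simply rebuild the command with new parameters (using `tc qdisc change` instead of `add`).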

Fig. 2: An illustrated conversion of the example topology (Fig. 1) on the real virtualized infrastructure (including the illustration of the internal communication).

Haptic interactions

One of the key areas of human-computer interaction is haptic interaction. Research in the laboratory covers haptic models and their computation in parallel and distributed systems.

- Haptic deformation modeling.

- Acceleration of haptic models using GPU architectures.

Contacts:

- Igor Peterlík, peterlik (at) ics.muni.cz

- Jiří Filipovič, fila (at) ics.muni.cz

- Jan Fousek, izaak (at) mail.muni.cz

Haptic deformation modeling

Real-time physically based deformation modeling is of paramount interest, mainly for the design and implementation of surgical simulations. Here, accurate and realistic behavior is necessary for the model to be suitable for surgical training. On the other hand, the model must be rendered inside the haptic loop running at a high refresh rate (above 1 kHz) to ensure the stability of the interaction.

Contribution

The contributions have been published in several papers and can be summarized as follows:

- Proposal of a technique based on off-line precomputation and approximation, allowing real-time haptic interaction with non-linear models of soft tissue.

- Proposal of an extension to the precomputation technique, allowing on-line construction of the data needed for the approximation.

- Design of new distributed algorithms for both off-line and on-line construction of the data using the message-passing interface (MPI).

- Proposal of improvements to the approximation procedure based on three different interpolation techniques, and proposal of an extrapolation mode for cases where the data are not available for interpolation.

- Specification and analysis of simulation parameters determining the response forces observed during interaction with soft tissue.

- Convergence analysis of geometrically and/or physically non-linear models of soft tissue with respect to a large number of deformations.
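The precompute-then-approximate idea in the first items can be shown with a deliberately simplified one-dimensional sketch. The actual work uses non-linear finite element models, a multi-dimensional state space, and three interpolation techniques; here the state is a single displacement, `expensive_solve` is a hypothetical stand-in for an off-line FE solve (a stiffening spring law, units assumed to be mm and N), and only piecewise-linear interpolation with linear extrapolation is used.

```python
import bisect


def expensive_solve(displacement_mm):
    """Hypothetical stand-in for an off-line non-linear FE solve (stiffening spring)."""
    return 0.5 * displacement_mm + 0.02 * displacement_mm ** 3


def precompute(step_mm, count):
    """Off-line phase: sample the response at regularly spaced displacements."""
    xs = [i * step_mm for i in range(count)]
    return xs, [expensive_solve(x) for x in xs]


def force_at(x, xs, fs):
    """On-line phase (inside the >1 kHz haptic loop): cheap piecewise-linear lookup.

    Outside the sampled range, extrapolate linearly from the two nearest samples.
    """
    i = bisect.bisect_right(xs, x) - 1
    i = max(0, min(i, len(xs) - 2))  # clamp so [i, i + 1] is a valid segment
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return fs[i] + t * (fs[i + 1] - fs[i])
```

With `xs, fs = precompute(1.0, 11)`, a query at 2.5 mm costs one binary search and one multiply-add (returning 1.6 against an exact 1.5625), which fits comfortably in a 1 ms haptic frame, while the expensive solves happen only during precomputation.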

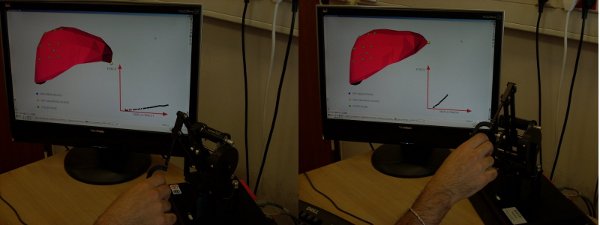

Demonstrations

Two screenshots of the application developed in cooperation with Mert Sedef at the Robotics and Mechatronics Laboratory, Koç University, Istanbul:

Publications

- I. Peterlík. Efficient precomputation of configuration space for haptic deformation modeling. In Proceedings of the Conference on Human System Interactions, pp. 225–230, IEEE Xplore, 2008. Best paper award.

- I. Peterlík and L. Matyska. Haptic interaction with soft tissues based on state-space approximation. In EuroHaptics ’08: Proceedings of the 6th International Conference on Haptics, (Berlin, Heidelberg), pp. 886–895, Springer-Verlag, 2008.

- I. Peterlík and L. Matyska. An algorithm of state-space precomputation allowing non-linear haptic deformation modeling using finite element method. In WHC’07: Proceedings of the Second Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, (Washington, DC, USA), pp. 231–236, IEEE Computer Society, 2007.

- J. Filipovič, I. Peterlík, and L. Matyska. On-line precomputation algorithm for real-time haptic interaction with non-linear deformable bodies. In Proceedings of the Third Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environments and Teleoperator Systems, pp. 24–29, 2009.

- I. Peterlík. Haptic Interaction with Non-linear Deformable Objects. PhD thesis, 142 pages, 2009.